The other day, a doc shared that she’s been using AI to help script videos for her YouTube channel. Another said it shaved hours off her reviews. Someone else even used it to map out a new business idea.

These stories are becoming more common. AI is here, and it’s helping physicians move faster, think bigger, and stretch beyond clinical work.

But with every “wow” moment comes a little voice in the back of your head: Wait… is this even legal?

Copyright headaches. Deepfakes. Patient privacy concerns. And let’s not forget the fine print no one reads on those AI platforms. It’s easy to feel excited and uneasy at the same time.

Here’s the good news: you can use AI confidently without putting your license, brand, or business at risk. You just need a few clear guidelines.

Below are five golden rules to help you do just that while staying far away from lawsuits.

Disclaimer: While these are general suggestions, it’s important to conduct thorough research and due diligence when selecting AI tools. We do not endorse or promote any specific AI tools mentioned here. This article is for educational and informational purposes only. It is not intended to provide legal, financial, or clinical advice. Always comply with HIPAA and institutional policies. For any decisions that impact patient care or finances, consult a qualified professional.

Rule 1: Own (or Properly License) What You Put Into AI

U.S. copyright law protects original human-created content. That includes your photos, videos, audio, slides, and written material. If you feed content into an AI system that you do not own or license, you may be creating an infringing “derivative work,” even if the final output looks different.

The U.S. Copyright Office is actively studying how AI training and use interact with copyright and has confirmed that traditional copyright principles still apply to AI. You can see this in their Copyright and Artificial Intelligence initiative and related policy guidance.

Risky examples:

- Uploading a professional headshot of a well-known physician speaker into an image generator to create “funny” variations for ads.

- Training or fine-tuning a model on question banks, board materials, journal PDFs, or textbook pages that you do not own or are not licensed to use that way.

- Feeding patient photos, videos, or voicemails into a non-HIPAA-compliant AI tool, even for “internal testing.”

Safer practices:

- Use only content you created or content with clear licenses (public domain, CC0, or properly purchased stock).

- Use AI tools that allow you to opt out of model training on your inputs.

- Treat anything containing PHI as completely off-limits for consumer AI tools.

If you would not upload it to a public website under your name, do not upload it into an AI system.

Rule 2: Treat AI Output as a Draft, Then Add Your Expertise

One of the biggest legal questions right now is whether AI outputs can be copyrighted. The U.S. Copyright Office has repeatedly emphasized that human authorship is required for copyright protection, and that purely AI-generated works cannot be copyrighted under current law.

In March 2025, a federal appeals court (the D.C. Circuit, in Thaler v. Perlmutter) agreed, upholding the Copyright Office’s refusal to register a work created entirely by an AI system without human involvement.

At the same time, the Copyright Office has clarified that works created with AI assistance can be protected if there is enough human creativity in how you edit, select, arrange, or modify the AI output. That is summarized in its 2025 report on AI and copyright and echoed by legal commentators. See, for example, this overview from Wiley: Generative AI in Focus: Copyright Office’s Latest Report.

What this means for you:

- Use AI to produce a first draft, not a finished product.

- Always revise, reorganize, and add your own analysis, examples, and clinical insight.

- Make sure your contributions are substantial enough that the work is clearly “yours” and not just the model’s.

If you want to build a long-term brand, course, or publication around your content, that level of human authorship is critical.

Rule 3: Do Not Use AI to Impersonate Real People’s Faces or Voices

Deepfake and impersonation laws are evolving quickly in the United States. Multiple states have passed laws targeting deceptive AI-generated audio or visual media, especially around elections and other sensitive contexts, and more are coming.

On top of that, the Federal Trade Commission (FTC) has specifically warned about the risks of AI voice cloning, which is being used in scams where criminals impersonate family members, bosses, or public figures. The FTC’s consumer alert, “Fighting back against harmful voice cloning,” highlights how realistic and dangerous these tools have become.

High-risk behaviors to avoid:

- Cloning a colleague’s or mentor’s voice with AI to “surprise” your audience.

- Generating an AI video that closely mimics a real person’s face, body, or mannerisms without consent.

- Using AI to make it appear that a patient, staff member, or another physician said or did something they did not.

Even if your intent is educational or humorous, you can run into state “deepfake” laws, right-of-publicity claims, or general misrepresentation issues.

Safer practices:

- Use AI tools that create clearly synthetic faces that are not based on real people.

- Use your own voice and likeness, or a professional actor who has signed a clear release that includes AI use.

- Clearly label any AI-generated media when it might confuse viewers.

If the content could make a reasonable person think it is a real person speaking or acting, you should have explicit written permission.

Rule 4: Read the AI Tool’s Terms Before You Rely on It

This is the least glamorous but most practical rule.

Different tools handle ownership, training, and privacy very differently. Some give you broad commercial rights to use outputs. Others keep more control or store more of your data. The FTC has repeatedly signaled that AI companies must honor their privacy and confidentiality commitments and may be required to delete models trained on unlawfully obtained data. See, for example, the FTC’s guidance to AI companies on privacy and confidentiality commitments: “AI companies: Uphold your privacy and confidentiality commitments”.

Before you commit to a tool for your business or content, check:

- Who owns the output? Do you have full commercial rights, or is it limited?

- Can the company train on your data? Is training opt-in, opt-out, or mandatory?

- How is your data stored, and for how long? This is especially important for anything remotely sensitive.

- Is the tool appropriate for healthcare? For anything involving PHI, you must use a HIPAA-compliant tool whose vendor has signed a Business Associate Agreement (BAA) with you or your organization.

Speaking of HIPAA, the HIPAA Privacy Rule sets national standards for protecting individuals’ medical records and other identifiable health information. HHS summarizes these protections here: HIPAA Privacy: For Professionals.

If the tool’s terms are unclear or feel too broad, assume it is not the right place for any patient-related or sensitive information.

Rule 5: When in Doubt, Get Written Consent or Walk Away

Consent is your safest legal shield.

Even outside of HIPAA, U.S. law increasingly treats biometric identifiers like face and voice as sensitive personal data. Several states have biometric privacy laws, and federal law has also begun to address non-consensual intimate deepfakes. For instance, the federal “Take It Down Act,” signed into law in 2025, targets non-consensual intimate images, including AI-generated ones, and requires covered platforms to remove them within 48 hours of a valid removal request.

Within healthcare, HIPAA’s Privacy Rule requires covered entities to protect identifiable health information and limits when it can be used or disclosed without authorization.

High-risk situations where you should pause:

- You want to use a patient’s story, voice, or image in any AI-assisted content.

- You are considering uploading clinic recordings, operative videos, or WhatsApp voice notes from patients into an AI tool.

- You plan to generate or enhance content that includes staff, colleagues, or family members.

Best practices:

- Use written consent forms that explicitly mention AI tools, synthetic media, and online publication.

- When consent is not possible or practical, fully de-identify the content and avoid any image, voice, or video that could be linked back to a real person.

- If you have a lingering “this feels off” sensation, listen to it.

When in doubt, it is almost always safer and easier to create a de-identified example or use a purely fictional scenario.

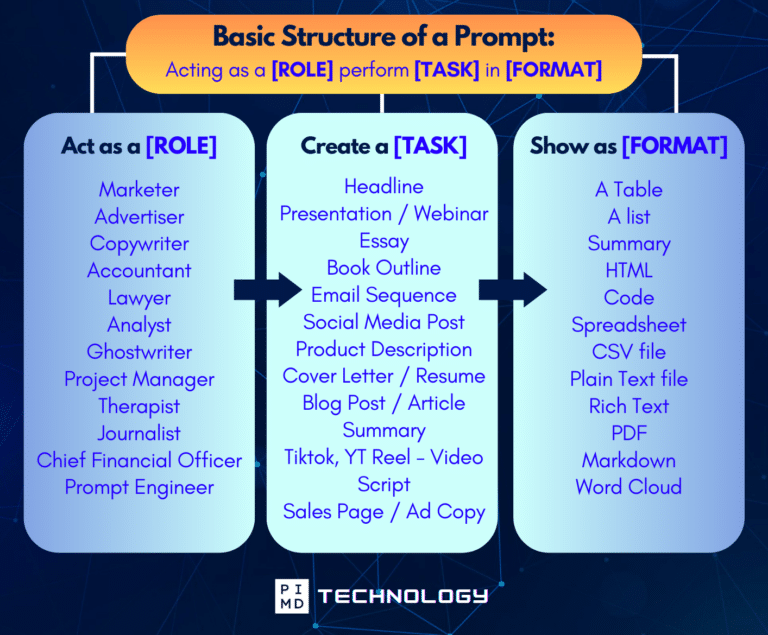

Unlock the Full Power of ChatGPT With This Copy-and-Paste Prompt Formula!

Download the Complete ChatGPT Cheat Sheet! Your go-to guide to writing better, faster prompts in seconds. Whether you’re crafting emails, social posts, or presentations, just follow the formula to get results instantly.

Save time. Get clarity. Create smarter.

Tips for AI-Generated Images, Voice, Video, and Text

AI Image Generation

- Use descriptive prompts about concepts, not real people.

- Avoid recreating branded logos, sports team marks, or copyrighted characters.

- Confirm that the tool’s license allows commercial use if images will appear in a book, course, or paid program.

AI Voice Generation

- Never clone someone’s voice without written permission.

- Be careful using synthetic voices for any medical instruction. A human clinician should always review the final script and audio for accuracy and tone.

- Remember that voice can be treated as part of a person’s “likeness” for right-of-publicity claims.

AI Video Generation

- Do not feed patient or staff videos into consumer AI platforms.

- Avoid semi-realistic avatars that resemble specific individuals unless they have given consent.

- Label AI-generated educational videos clearly so learners understand what is real footage and what is simulated.

AI Text Generation

- Fact-check every clinical or financial claim. AI can hallucinate citations or invent studies.

- Add your own perspective, nuance, and examples so the content reflects your real expertise.

- Avoid pasting in large chunks of licensed text from journals or textbooks and asking AI to “rewrite” them.

Final Thoughts

AI can be a powerful tool for physicians. It can help you save time, generate ideas, streamline your work, and even open doors to new income streams. But like any powerful tool, it comes with sharp edges.

If you want to use AI without putting your license, reputation, or business at risk, stick to the five golden rules:

- Own or license what you put in

- Treat the output like a first draft and always add your expertise

- Don’t impersonate real faces or voices

- Read the terms and privacy policies

- When in doubt, ask for consent (or walk away)

And above all else, don’t skip the most important step: do your homework. Check everything. Verify facts. Cross-reference sources. Just because a tool is fast doesn’t mean you should be. Due diligence will always be your best protection.

You don’t have to fear AI. You just have to respect the process. Use it wisely, stay curious, and remember: your judgment is still your greatest asset.

Now go build something awesome!

By the way! To make AI even easier for you, we’ve put together something special:

Download The Physician’s Starter Guide to AI – a free, easy-to-digest resource that walks you through smart ways to integrate tools like ChatGPT into your professional and personal life. Whether you’re AI-curious or already experimenting, this guide will save you time, stress, and maybe even a little sanity.

Want more tips to sharpen your AI skills? Subscribe to our newsletter for exclusive insights and practical advice. You’ll also get access to our free AI resource page, packed with AI tools and tutorials to help you have more in life outside of medicine. Let’s make life easier, one prompt at a time. Make it happen!

Disclaimer: The information provided here is based on available public data and may not be entirely accurate or up-to-date. It’s recommended to contact the respective companies/individuals for detailed information on features, pricing, and availability. All screenshots are used under the principles of fair use for editorial, educational, or commentary purposes. All trademarks and copyrights belong to their respective owners.

The post How to Use AI and Not Get Sued (2025) appeared first on Passive Income MD.